The busy lecturer’s guide to LLMs

28 February, 2024

Take-home messages

- Explore LLMs firsthand to understand their strengths and weaknesses.

- Combine domain knowledge with an understanding of how LLMs work and with effective prompting strategies.

- Integrate LLMs into teaching to foster AI literacy among students.

- Critically evaluate an LLM’s output. LLMs are language models, not knowledge bases.

- Keep a human in the loop.

Assistant menagerie

| Assistant | Provider | Privacy | LLM | Capabilities | Pricing model |
|---|---|---|---|---|---|
| ChatGPT | OpenAI | 👎🏼 | GPT-3.5, GPT-4 | Web search, DALL·E, GPTs, multimodal input | 💶 |
| Copilot | Microsoft | 👍🏼 | GPT-3.5, GPT-4 | Web search, DALL·E, multimodal input | 🆓 for BFH employees and students |
| Gemini | Google | 👎🏼 | Gemini Ultra, Gemini Pro, Gemini Nano | Web search, multimodal input | 💶 |
| HuggingChat | 🤗 Hugging Face | 👍🏼 | Various open models, e.g. CodeLlama, Llama 2, Mistral, Gemma | | 🆓 |

Training

How to train a language model

Example

Generalization

- The ability to apply knowledge to new, unseen data/situations

- E.g., a language model should learn to generate new rhymes, not just reproduce rhymes from its training data.

- An LLM extracts knowledge from text: linguistic, factual, commonsense, etc.

What is learned?

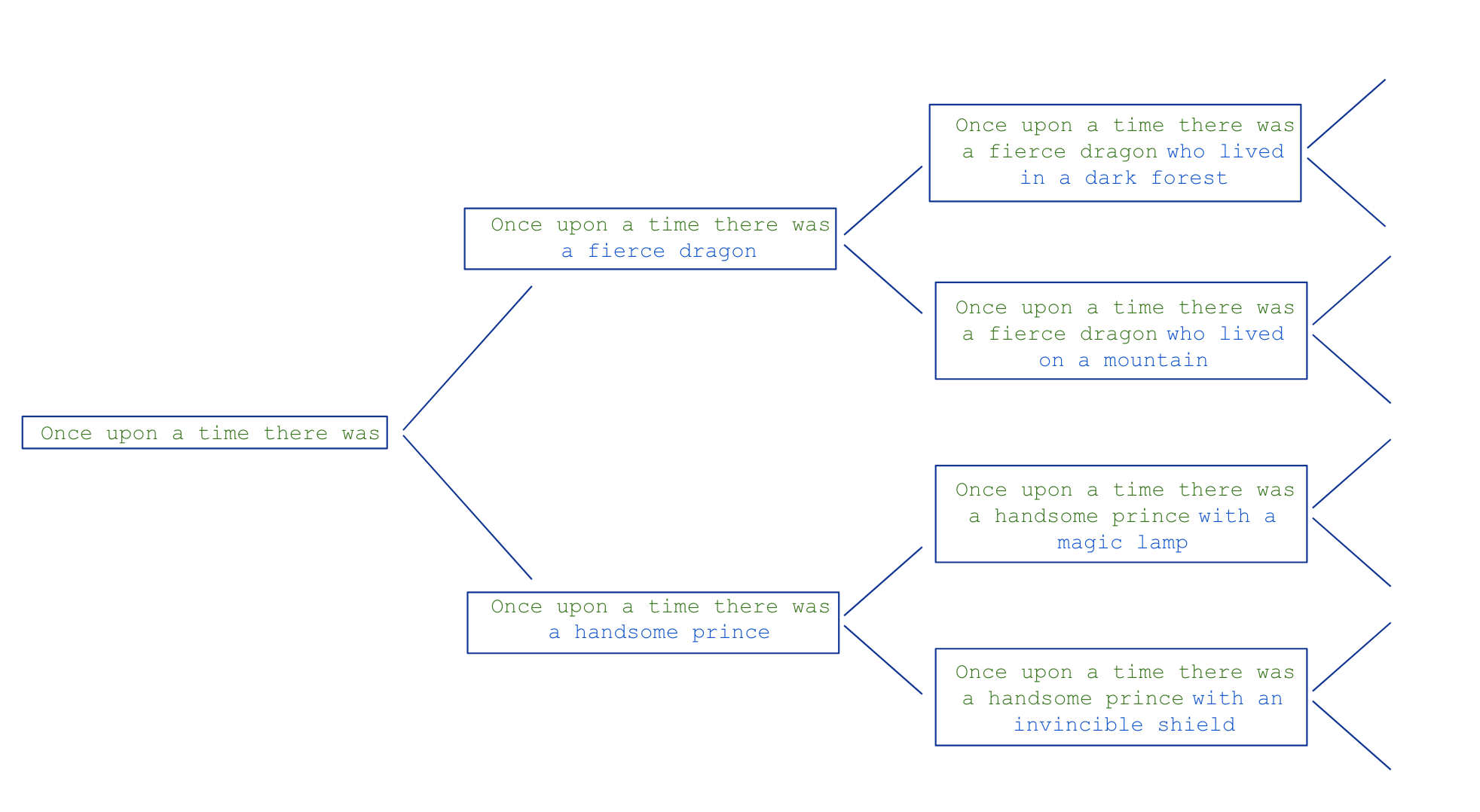

- An LLM learns to predict the next word in a sequence, given the previous words: \[ P(\text{word} \mid \text{context}) \]

- Think of it as “fancy autocomplete” (but very, very powerful and sophisticated).
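To make “fancy autocomplete” concrete, here is a minimal sketch that estimates \( P(\text{word} \mid \text{context}) \) by counting word pairs in a tiny, made-up corpus. It assumes the context is only the single previous word (a bigram model); a real LLM conditions on the whole context using a neural network, so treat this as an analogy rather than as how LLMs are built. The names `train_bigram` and `autocomplete` are hypothetical.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus (hypothetical, for illustration only).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigram(text: str) -> dict[str, dict[str, float]]:
    """Estimate P(next word | previous word) by counting adjacent word pairs."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    # Normalise each row of counts into a probability distribution.
    return {
        prev: {w: c / sum(row.values()) for w, c in row.items()}
        for prev, row in counts.items()
    }

def autocomplete(model: dict[str, dict[str, float]], word: str) -> str:
    """Return the most probable next word: autocomplete without the 'fancy'."""
    dist = model[word]
    return max(dist, key=dist.get)

model = train_bigram(corpus)
print(model["cat"])                # {'sat': 0.5, 'ate': 0.5}
print(autocomplete(model, "sat"))  # on
```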

Text Generation

How does an LLM generate text?

Sampling

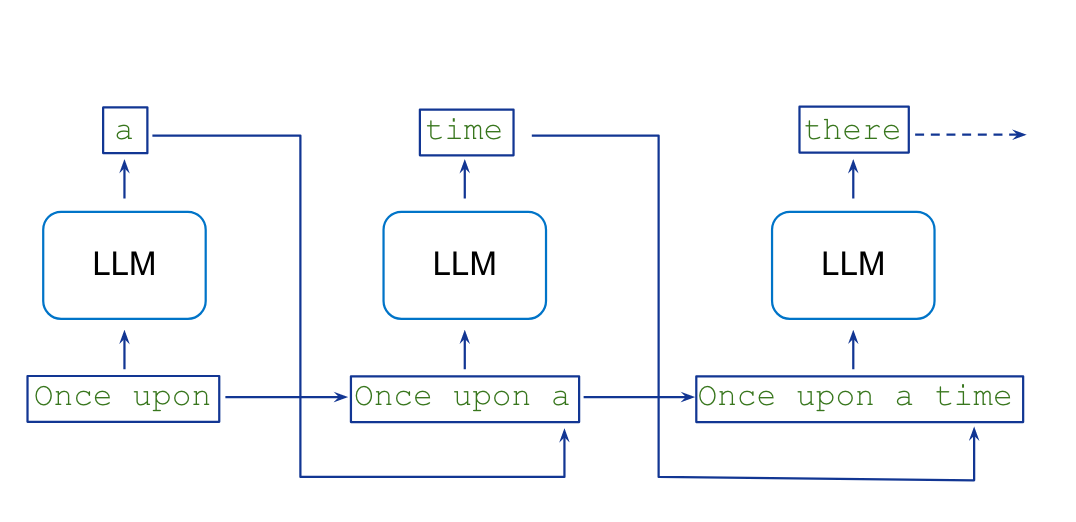

Auto-regressive generation

- Text is generated one word at a time (actually tokens, not words).

- Model predicts which token is likely to follow, given a sequence of tokens (words, punctuation, emojis, etc.).

- Each new token is added to the sequence of tokens that the model uses to predict the next token.

\[ P(w_{t+1} \mid w_1, w_2, \ldots, w_t) \]

- The sequence of tokens used for the prediction is called the context.

Generated text is dependent on the context.

Every token is given an equal amount of time (computation per token is constant).

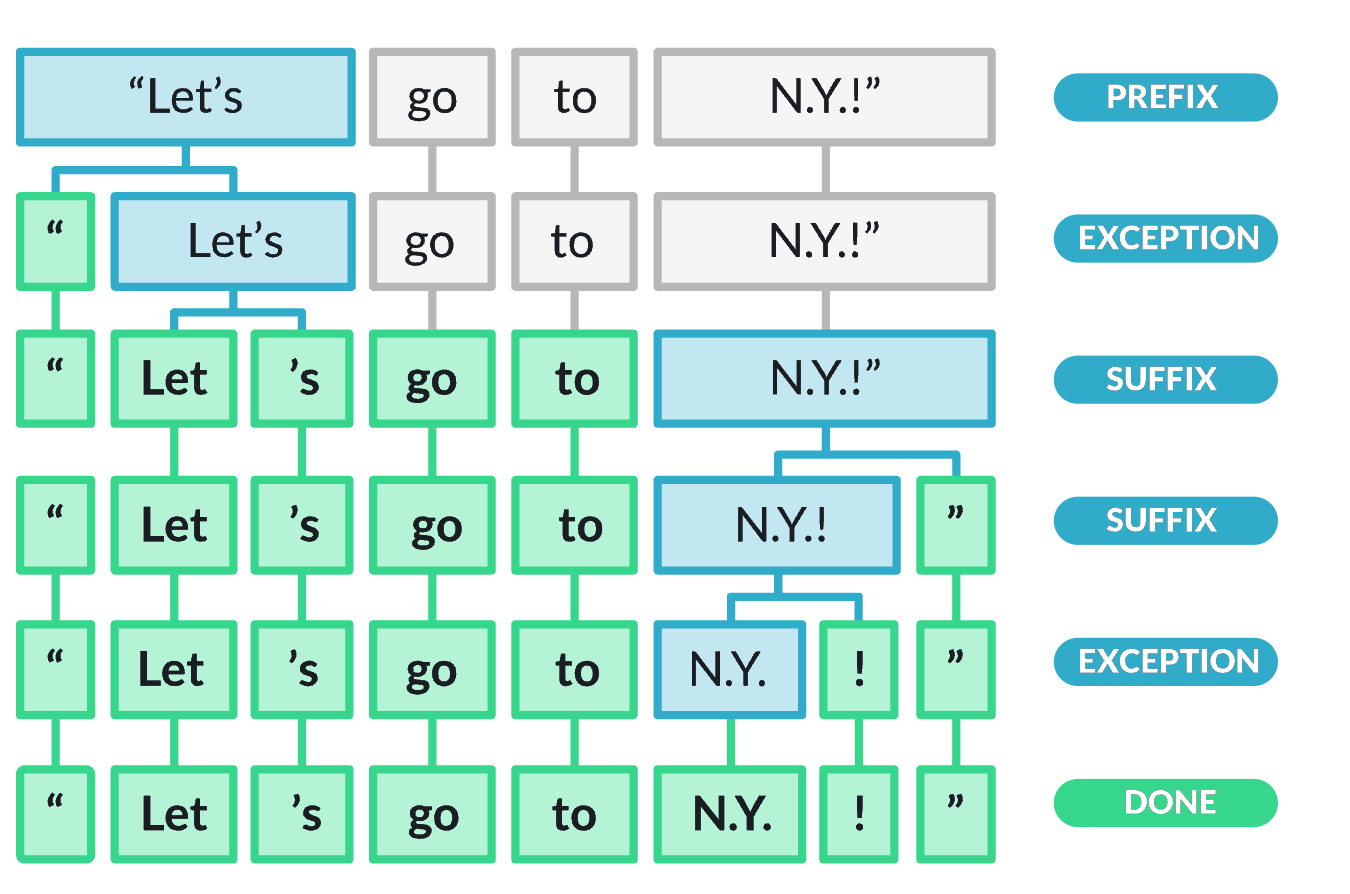
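The auto-regressive loop can be sketched in a few lines of Python. The next-token probabilities in the hypothetical table `NEXT_TOKEN_PROBS` are invented and only look at the last token (a real LLM computes them from the whole context); what the sketch does show is the pattern itself: sample one token, append it to the context, repeat.

```python
import random

# Hypothetical next-token probabilities, invented for illustration.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 0.6, "A": 0.4},
    "The": {"cat": 0.5, "dog": 0.5},
    "A": {"cat": 0.3, "dog": 0.7},
    "cat": {"sleeps": 0.6, "purrs": 0.4},
    "dog": {"barks": 0.8, "sleeps": 0.2},
    "sleeps": {".": 1.0},
    "purrs": {".": 1.0},
    "barks": {".": 1.0},
}

def generate(max_tokens: int = 10, seed: int = 0) -> list[str]:
    """Generate tokens one at a time, feeding each new token back into the context."""
    rng = random.Random(seed)
    context = ["<start>"]
    for _ in range(max_tokens):
        dist = NEXT_TOKEN_PROBS[context[-1]]
        # Sample the next token in proportion to its probability.
        token = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        context.append(token)  # the new token becomes part of the context
        if token == ".":
            break
    return context[1:]  # drop the start marker

for seed in range(3):
    print(" ".join(generate(seed=seed)))
```

Different seeds can produce different sentences from the same starting point, which is why the same prompt can yield different answers.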

Tokenization

LLMs operate with tokens, not words. Tokens are often sub-word units, which makes working with text much easier for the model. A rule of thumb is that one token generally corresponds to ~4 characters of English text. This translates to roughly \(\frac{3}{4}\) of a word (so 100 tokens is about 75 words).

Feel free to try out the OpenAI tokenizer.

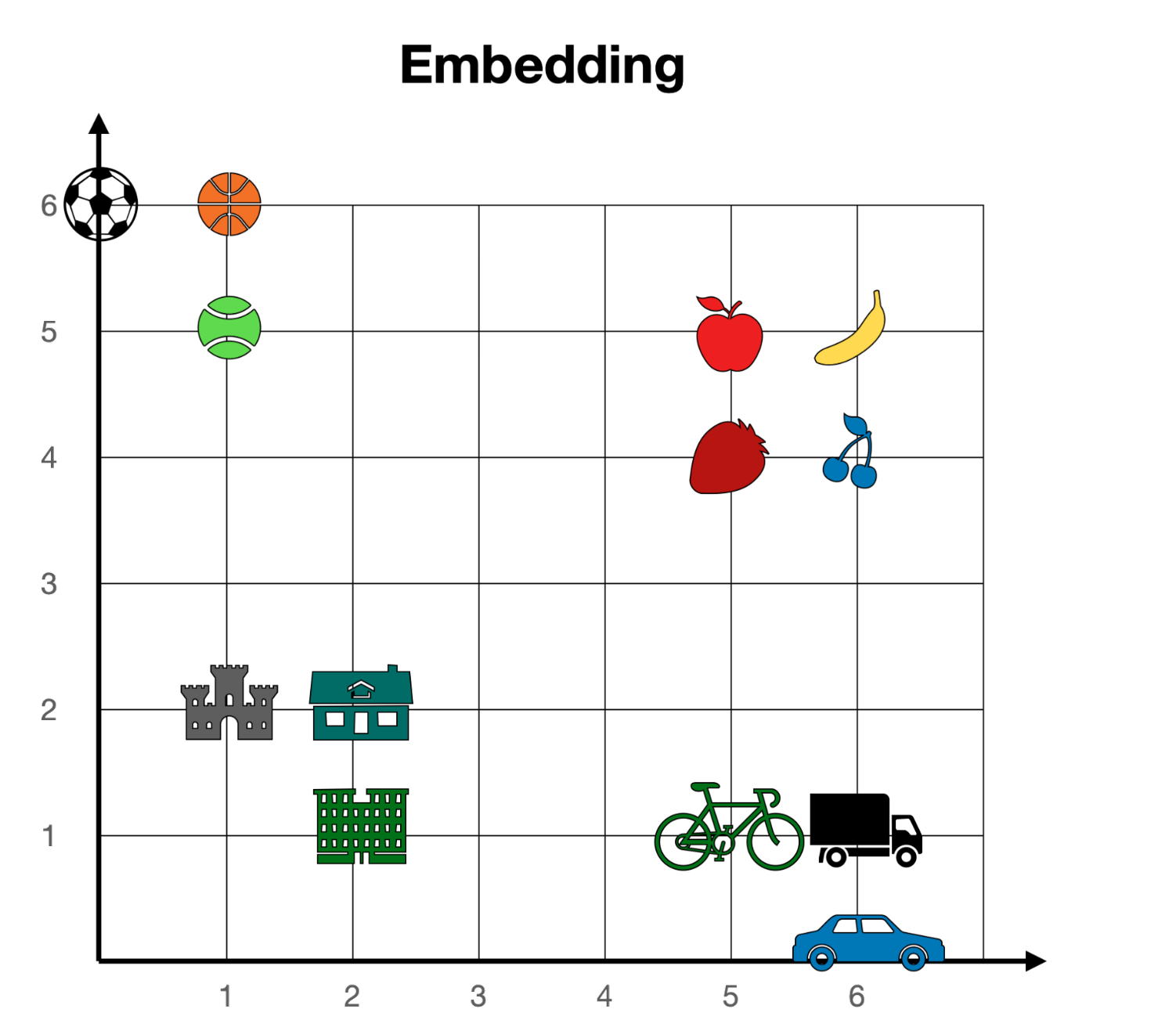
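A minimal sketch of the rule of thumb, together with a toy sub-word tokenizer. The vocabulary `VOCAB` and the greedy longest-match strategy are assumptions chosen for illustration; real tokenizers such as OpenAI’s (based on byte-pair encoding) learn their vocabularies from data and split text differently.

```python
# Rule of thumb: 1 token ≈ 4 characters ≈ 3/4 of an English word.
def estimate_tokens_from_chars(text: str) -> float:
    return len(text) / 4

def estimate_tokens_from_words(n_words: int) -> float:
    return n_words * 4 / 3

# HYPOTHETICAL sub-word vocabulary, for illustration only.
VOCAB = {"token", "ization", "un", "believ", "able", "s", " "}

def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by repeatedly taking the longest vocabulary entry that matches."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shorter ones.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # Unknown character: fall back to a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens

print(greedy_tokenize("tokenization unbelievable", VOCAB))
# ['token', 'ization', ' ', 'un', 'believ', 'able']
print(estimate_tokens_from_words(75))  # 100.0
```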

Embeddings

- The next step is to represent tokens as vectors.

- This is called “embedding” the tokens. The vectors are high-dimensional, and the distance between vectors measures the similarity between tokens.

In this 2-dimensional representation, concepts that are “related” lie close together. Read about embeddings in this tutorial.

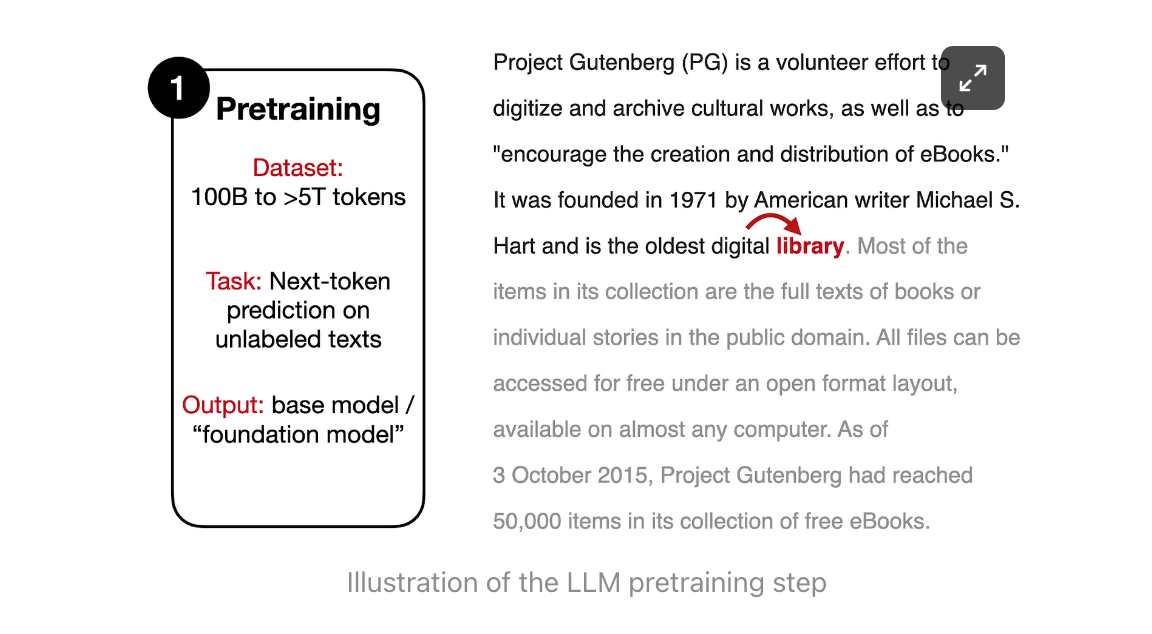
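As a hedged illustration of “close together”, the sketch below compares made-up 3-dimensional vectors with cosine similarity, one common similarity measure for embeddings. The values in `EMBEDDINGS` are invented; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math

# Hypothetical embeddings, invented for illustration.
EMBEDDINGS = {
    "cat": [0.90, 0.80, 0.10],
    "dog": [0.85, 0.75, 0.20],
    "car": [0.10, 0.20, 0.95],
}

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"]), 3))
print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"]), 3))
```

With these values, `cat` and `dog` score close to 1, while `cat` and `car` score much lower.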

Foundation models

A foundation model, or large language model (LLM):

- is a type of machine learning model that is trained “simply” to predict the next word following a sequence of words (the input, or prompt).

- does not necessarily produce human-like conversations.

PROMPT: What is the capital of France?

COMPLETION: What is the capital of Germany? What is the capital of Italy? ...

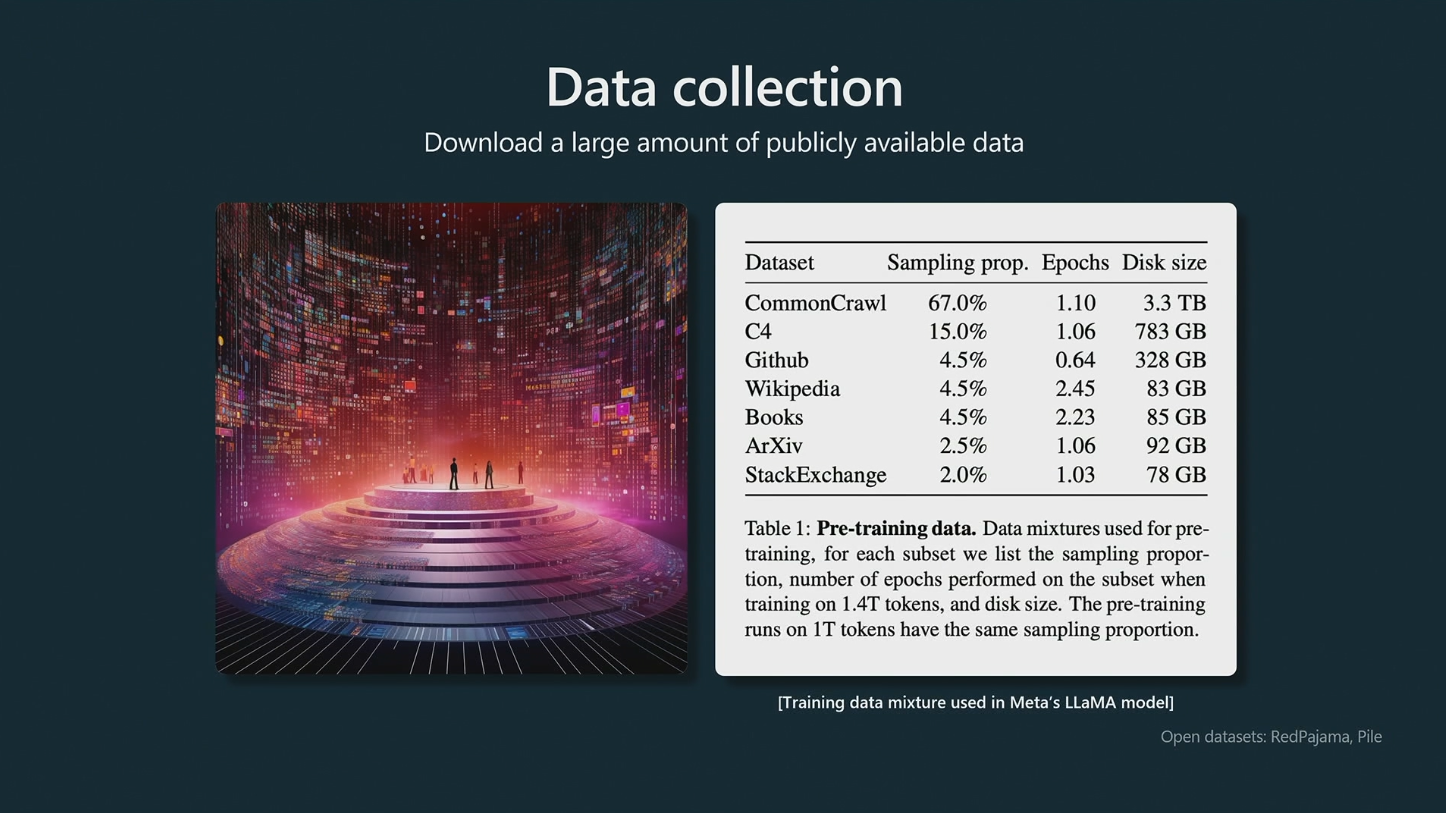

Training data

Figure courtesy of Andrej Karpathy

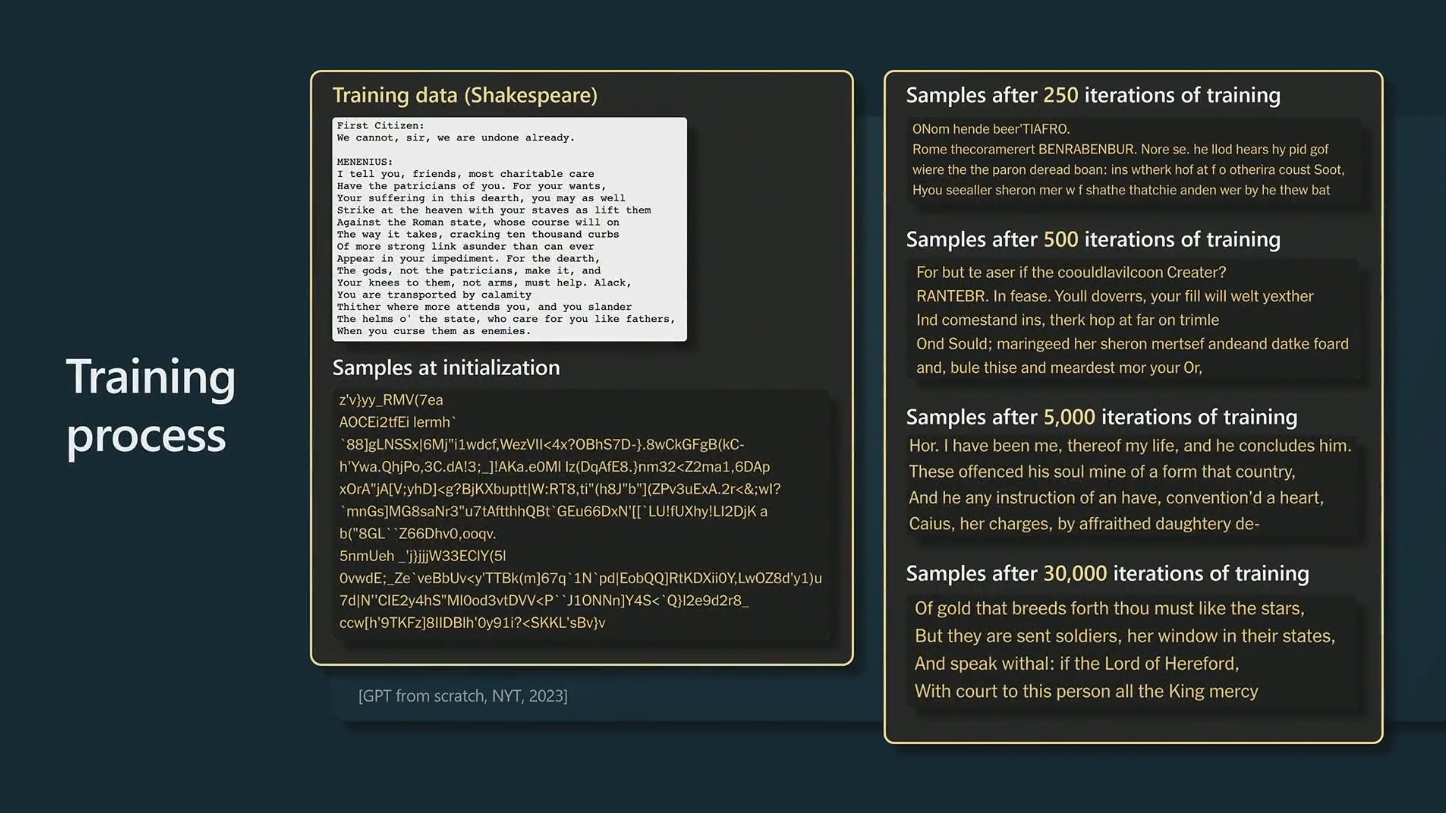

Training process

Figure courtesy of Andrej Karpathy

Emergent abilities

LLMs are thought to show emergent abilities: abilities that are not explicitly taught but emerge as a by-product of learning to predict text.

Abilities include:

- performing arithmetic, answering questions, summarizing text, translating, etc.

- zero-shot learning: LLMs can perform tasks without task-specific training or examples.

- few-shot learning: LLMs can perform tasks after seeing only a few examples (e.g., in the prompt).
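Zero-shot and few-shot prompts differ only in whether solved examples are included. The sketch below builds both kinds of prompt as plain strings; the `Input:`/`Output:` layout and the function names are assumptions for illustration, not a required format.

```python
def zero_shot_prompt(task: str, query: str) -> str:
    """Describe the task and give no examples."""
    return f"{task}\n\nInput: {query}\nOutput:"

def few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Describe the task and show a few solved examples before the real query."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {query}\nOutput:"

task = "Translate English to German."
examples = [("cheese", "Käse"), ("good morning", "guten Morgen")]
print(few_shot_prompt(task, examples, "thank you"))
```

Ending the prompt with `Output:` makes the answer the most natural continuation of the text.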

Emergent abilities

What kind of knowledge does an LLM need to write a continuation of the following text?¹

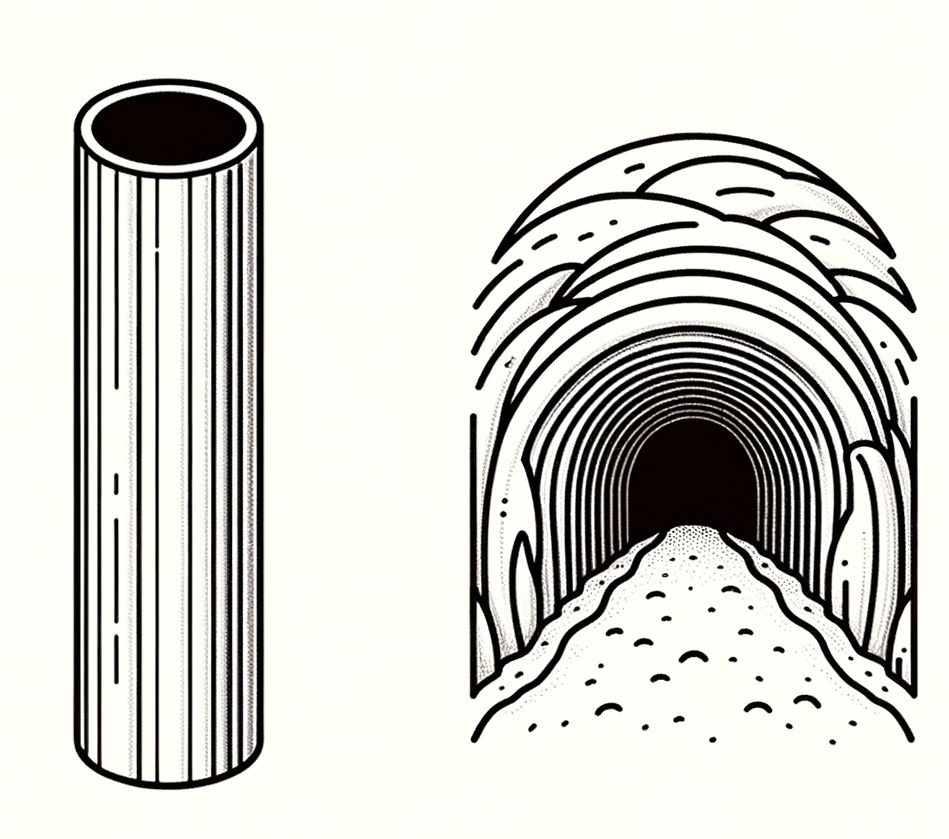

PROMPT: How many holes does a straw have?

ASSISTANT: A straw has one hole. It’s a cylindrical tunnel that runs through the entire length, creating a single continuous space from one end to the other.

PROMPT: What about a tunnel?

ASSISTANT: Similar to a straw, a tunnel can also be considered to have one hole. It’s an elongated pathway carved through an obstruction, allowing passage from one side to the other, thus creating a single continuous space or hole through the material.

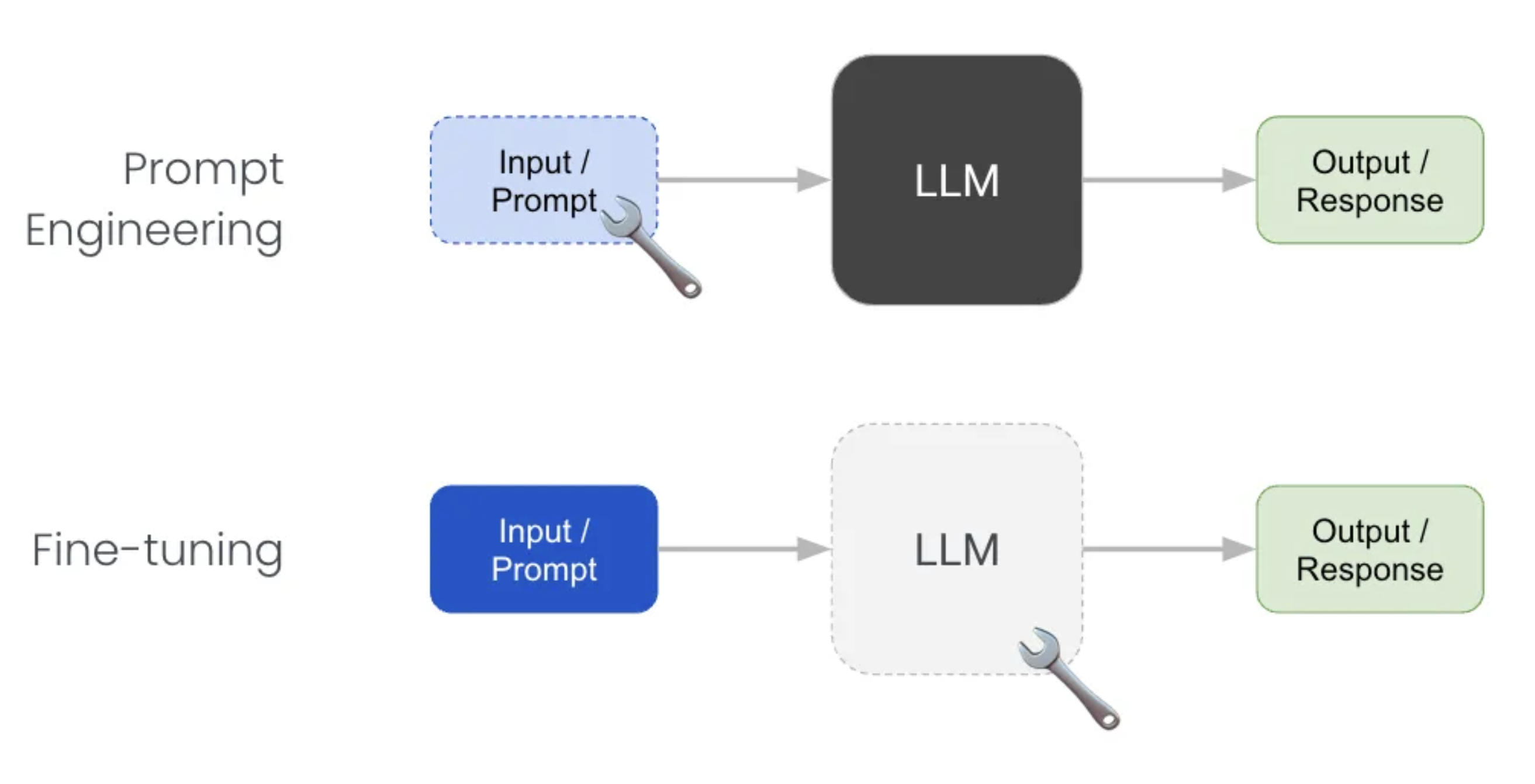

Assistant models

Trained (fine-tuned) in two stages to have conversations: turn-taking, question answering, not being [rude/sexist/racist], etc.

The foundation model has learned to predict all kinds of text, including both desirable and undesirable text.

Fine-tuning is a process that narrows down the space of all possible outputs to desirable, human-like dialogue.

The model is aligned with the values of whoever fine-tunes it.

Instruction fine-tuning

Reinforcement learning from human feedback (RLHF)

How do chatbots work?

- Designed to present the illusion of a conversation between two entities.
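One way to see the “illusion” is that a conversation is ultimately turned into a single piece of text that the model continues. The sketch below flattens chat turns using a hypothetical `ROLE: text` template; real assistants use model-specific chat templates with special tokens, so the exact format here is an assumption.

```python
# A chat stored as a list of turns (hypothetical example).
conversation = [
    {"role": "system", "content": "You are a helpful teaching assistant."},
    {"role": "user", "content": "How many holes does a straw have?"},
]

def to_prompt(turns: list[dict[str, str]]) -> str:
    """Flatten chat turns into one text that ends with an open assistant turn."""
    lines = [f"{t['role'].upper()}: {t['content']}" for t in turns]
    lines.append("ASSISTANT:")  # the model simply continues the text from here
    return "\n".join(lines)

print(to_prompt(conversation))
```

The model’s “reply” is just its continuation after `ASSISTANT:`; the next user turn is appended to the same text and the whole thing is sent again.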

How do chatbots actually work?

An LLM is a role-play simulator

We can think of an LLM as a non-deterministic simulator capable of role-playing an infinity of characters, or, to put it another way, capable of stochastically generating an infinity of simulacra (Shanahan, McDonell, and Reynolds 2023)

An LLM is a role-play simulator

- An assistant is trained to respond to user prompts in a human-like way.

- A simulator of possible human conversation.

- Has no intentions. It is not an entity with its own goals.

- Does not have a “personality” or “character” in the traditional sense. It can be thought of as a role-playing simulator.

- Has no concept of “truth” or “lying”. The model is not trying to deceive the user; it is simply trying to respond in a human-like way.

Stochastic generation

Hallucination

- LLMs can generate text that is not true, or not based on any real-world knowledge.

- This is known as “hallucination”. A better term would be “confabulation”.

Knowledge base

- A knowledge base is a collection of facts about the world.

- I can *ask* (retrieve) and *tell* (store) facts.
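The *ask*/*tell* interface can be sketched as a tiny Python class, assuming a fact is a (subject, relation, value) triple. This hypothetical `KnowledgeBase` is only there for contrast with an LLM: a new fact is usable as soon as it is told, and an unknown fact returns `None` instead of a plausible-sounding guess.

```python
from typing import Optional

class KnowledgeBase:
    """A minimal knowledge base: facts go in with tell() and come back with ask()."""

    def __init__(self) -> None:
        self._facts: dict[tuple[str, str], str] = {}

    def tell(self, subject: str, relation: str, value: str) -> None:
        """Store a fact; it is available immediately, without any retraining."""
        self._facts[(subject, relation)] = value

    def ask(self, subject: str, relation: str) -> Optional[str]:
        """Retrieve a stored fact, or None if the fact is unknown (no guessing)."""
        return self._facts.get((subject, relation))

kb = KnowledgeBase()
kb.tell("Uzbekistan", "capital", "Tashkent")
print(kb.ask("Uzbekistan", "capital"))  # Tashkent
print(kb.ask("Kazakhstan", "capital"))  # None
```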

Can an LLM tell the truth?

- How would you know if an LLM is able to give you factual information?

- How would you test this?

PROMPT: What is the capital of Uzbekistan?

ASSISTANT: Tashkent

It looks like the LLM knows the capital of Uzbekistan.¹

Confirmation bias

- People tend to search for evidence consistent with their current beliefs.

Are LLMs knowledge bases?

- I can *ask*, but the response is not verifiable.

- I can’t *tell*, i.e., I can’t store new information (LLMs are expensive and difficult to update with new knowledge).

- An LLM can’t tell me where it got its information from.

- LLMs are models of knowledge bases, but not knowledge bases themselves.

But can an LLM think?

- LLMs generate text token-by-token.

- They output a probability distribution over all tokens.

- The “magic” happens here: the learned probability distribution.

- LLMs do not plan ahead.

- LLMs cannot go back and edit their output.

- The output depends on the context.
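The step from model output to a probability distribution over tokens can be sketched with the softmax function, which is commonly used for this purpose. The scores (`logits`) below are invented, and the `temperature` parameter is a common sampling setting added here for illustration: lower values sharpen the distribution, higher values flatten it.

```python
import math
import random

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores (logits) into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for four candidate next tokens.
tokens = ["Paris", "Lyon", "London", "banana"]
logits = [5.0, 2.0, 1.5, -3.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax(logits, temperature=t)
    print(t, [f"{tok}={p:.2f}" for tok, p in zip(tokens, probs)])

# Greedy decoding always takes the most probable token; sampling can pick others.
probs = softmax(logits)
greedy = tokens[probs.index(max(probs))]
sampled = random.Random(0).choices(tokens, weights=probs, k=1)[0]
print(greedy, sampled)
```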

How do humans think?

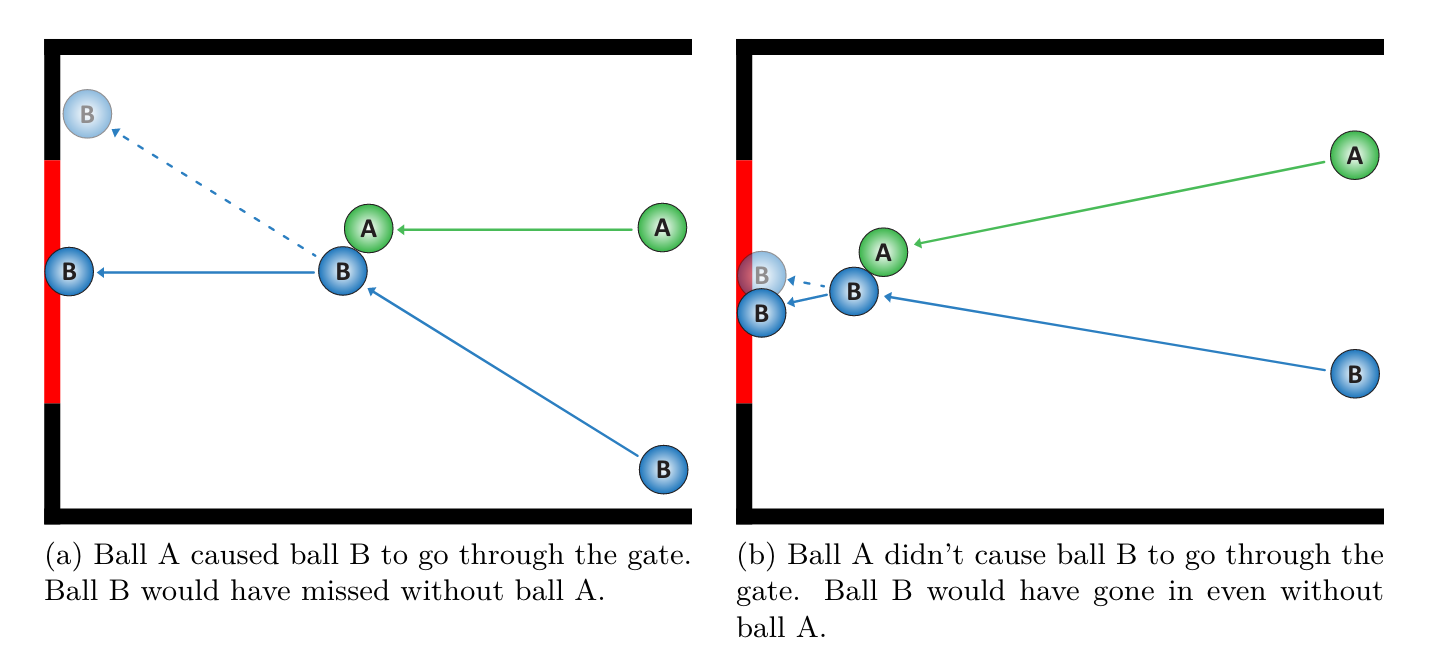

E.g. physical reasoning

Prompting

What is a prompt?

- Remember: the goal of an LLM is to complete text.

- A prompt is a piece of text (instruction) that is given to a language model to complete.

PROMPT: Write a haiku about a workshop on large language models.

ASSISTANT: Whispers of circuits,

Knowledge blooms in bytes and bits,

Model learns and fits.

- The response is generated as a continuation of the prompt.

Unlocking knowledge

- LLMs learn to do things they were not explicitly trained to do.

- Often, these capabilities need to be “unlocked” by the right prompt.

- What is the right prompt?

- The answer is very similar to what you would tell a human dialogue partner/assistant.

- You can increase the probability of getting the desired output by asking good questions or giving enough information.

Basics of prompting

OpenAI gives a set of strategies for using its models effectively. These include:

- writing clear instructions

- providing reference texts

- splitting tasks into subtasks

- giving the LLM ‘time to think’

- using external tools

Writing clear instructions

- Think of an LLM as a role-playing conversation simulator.

- Instructions should be clear and unambiguous.

- Indicate which role (persona) the model should adopt.

Adopt a persona (role)

PROMPT: You are an expert on learning techniques. Explain the concept of ‘flipped classroom’ in one paragraph.

PROMPT: You are an expert on financial derivatives. Explain the concept of ‘flipped classroom’ in one paragraph.

Provide reference texts

- Provide a model with trusted and relevant information.

- Then instruct the model to use the provided information to compose its answer.

Provide reference texts

PROMPT: You will be provided with a document delimited by triple quotes and a question. Your task is to provide a simplified answer to the question using only the provided document and to cite the passage(s) of the document used to answer the question. If the document does not contain the information needed to answer this question, then simply write: “Insufficient information.” If an answer to the question is provided, it must be annotated with a citation. Use the following format to cite relevant passages: ({"citation": …}). Cite only the relevant passage(s) of the document, not the entire document.

"""The flipped classroom intentionally shifts instruction to a learner-centered model, in which students are often initially introduced to new topics outside of school, freeing up classroom time for the exploration of topics in greater depth, creating meaningful learning opportunities. With a flipped classroom, ‘content delivery’ may take a variety of forms, often featuring video lessons prepared by the teacher or third parties, although online collaborative discussions, digital research, and text readings may alternatively be used. The ideal length for a video lesson is widely cited as eight to twelve minutes."""

Question: What is a flipped classroom?
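Citations requested this way can be checked mechanically, which helps keep a human in the loop. The sketch below assembles the prompt and then verifies that every `{"citation": ...}` passage in an answer appears verbatim in the document. The helper names and the example answer are hypothetical; the second citation was made up on purpose to show a failed check.

```python
import json
import re

def build_prompt(instructions: str, document: str, question: str) -> str:
    """Instructions, then the document delimited by triple quotes, then the question."""
    return f'{instructions}\n\n"""{document}"""\n\nQuestion: {question}'

def check_citations(answer: str, document: str) -> dict:
    """Map each cited passage in the answer to True if it appears verbatim in the document."""
    results = {}
    # Match {"citation": "..."} annotations, allowing escaped characters inside the string.
    for match in re.findall(r'\{"citation":\s*"(?:[^"\\]|\\.)*"\}', answer):
        passage = json.loads(match)["citation"]
        results[passage] = passage in document
    return results

document = (
    "The flipped classroom intentionally shifts instruction to a learner-centered model. "
    "The ideal length for a video lesson is widely cited as eight to twelve minutes."
)
# A made-up model answer: the first citation is genuine, the second is not.
answer = (
    "Students meet new material before class. "
    '{"citation": "shifts instruction to a learner-centered model"} '
    "Videos should be short. "
    '{"citation": "Videos should last under five minutes"}'
)
print(check_citations(answer, document))
```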

Giving GPT ‘time to think’

- LLMs generate text one token at a time, and the model spends the same amount of computation on each token.

- Letting the model write out more text (e.g., intermediate steps) before its final answer gives it more computation steps in which to “think”.

- This increases the chances that the model will give a good answer.

- This technique is known as chain-of-thought prompting, and can often be induced simply by instructing the model to “think step-by-step” or to “Take a deep breath and work on this problem step-by-step” (Yang et al. 2023).

Chain-of-thought prompting

- Chain-of-thought prompting encourages the LLM to “explain” its intermediate reasoning steps.

- Enables complex reasoning and problem solving.

Instead of this:

PROMPT: The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1. Yes or no?

Do this:

PROMPT: Is this statement correct? The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

Reason through the problem step-by-step. Start by identifying the odd numbers. Next, add them up. Finally, determine if the sum is even or odd. Write down your reasoning steps in a numbered list.

Zero-shot chain-of-thought prompting

PROMPT: The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1. Take a deep breath and think step-by-step.
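Working through the steps by hand shows what a correct chain of thought should conclude for this example: the odd numbers are 9, 15 and 1, their sum is 25, which is odd, so the answer is “no”. The same check, step by step, in Python:

```python
numbers = [4, 8, 9, 15, 12, 2, 1]

# Step 1: identify the odd numbers.
odd = [n for n in numbers if n % 2 == 1]
# Step 2: add them up.
total = sum(odd)
# Step 3: decide whether the sum is even or odd.
is_even = total % 2 == 0

print(odd, total, is_even)  # [9, 15, 1] 25 False
```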

Use Markdown formatting

- Use Markdown to format your prompts.

- Instruct the LLM to format its output using Markdown.

PROMPT: Improve this haiku:

Words weave through the air,

Minds meld with machine’s deep thought,

Knowledge blooms anew.

It is about a workshop on large language models. I’m not happy with it.

Show me all the text. Format your edits as **TEXT** and show the deleted text as ~~TEXT~~. Keep your review short (max 100 words).

Try this example in ChatGPT.

Advanced prompting techniques

For more advanced prompting techniques, see these websites:

and explore this activity.

Advanced LLM techniques

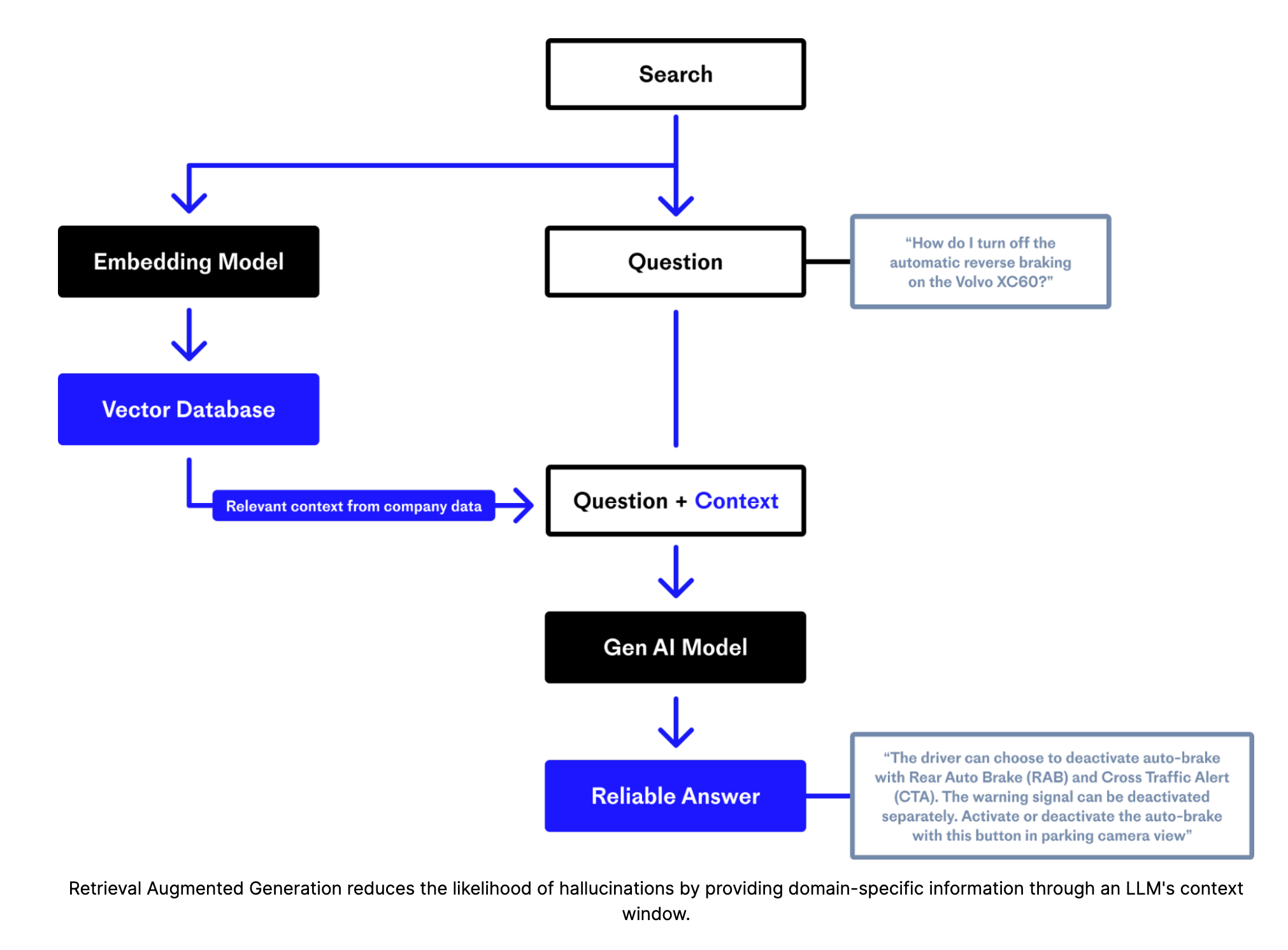

Retrieval-augmented generation (RAG)

Figure courtesy of Pinecone
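The retrieval step of RAG can be sketched without any external services. In this simplified version, the hypothetical `retrieve` function ranks documents by word overlap with the question, whereas real RAG systems typically compare embeddings stored in a vector database; the retrieved passages are then placed in the prompt.

```python
import re

# A tiny, hypothetical document collection standing in for a vector database.
DOCUMENTS = [
    "The flipped classroom shifts instruction to a learner-centered model.",
    "Retrieval-augmented generation adds retrieved text to the prompt.",
    "Tashkent is the capital of Uzbekistan.",
]

def words(text: str) -> set[str]:
    """Lower-case set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = words(query)
    return sorted(docs, key=lambda d: len(q & words(d)), reverse=True)[:k]

def augmented_prompt(query: str, docs: list[str]) -> str:
    """Put the retrieved passages into the prompt so the LLM answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer the question using only the information below.\n"
        f"{context}\n\nQuestion: {query}"
    )

print(augmented_prompt("What is the capital of Uzbekistan?", DOCUMENTS))
```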

Web search

- Similar to retrieval-augmented generation, but the information is retrieved through a web search.

- LLMs can be instructed to use web search to find information.

- Copilot does this automatically; ChatGPT (paid version only) can be instructed to do so.

External tools

- LLMs can be instructed to use external tools to complete tasks.

- For example, an LLM can be instructed to use a calculator to perform arithmetic.

- OpenAI calls this approach function calling.
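The application side of tool use can be sketched as follows: the model writes a structured request, the application runs the tool, and the result is sent back to the model. The JSON shape, the `TOOLS` registry and the `calculator` function here are hypothetical and simplified; OpenAI’s function-calling API uses its own request and response format.

```python
import ast
import json
import operator

# Operators the hypothetical calculator tool is allowed to evaluate.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def calculator(expression: str) -> float:
    """Safely evaluate + - * / arithmetic without calling eval()."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval"))

TOOLS = {"calculator": calculator}

def run_tool_call(model_output: str) -> str:
    """Execute a tool call the model wrote as JSON and return the result as JSON text."""
    call = json.loads(model_output)
    result = TOOLS[call["name"]](**call["arguments"])
    return json.dumps({"name": call["name"], "result": result})

# Pretend the LLM requested a tool call (hypothetical format).
model_output = '{"name": "calculator", "arguments": {"expression": "1234 * 5678"}}'
print(run_tool_call(model_output))  # {"name": "calculator", "result": 7006652}
```

The arithmetic is done by ordinary code, so the answer does not depend on the model predicting the right digits.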

Multi-agent conversations

- DEMO: haiku writing team

Local models

Hardware requirements:

- Apple Silicon Mac (M1/M2/M3) / Windows / Linux

- 16GB+ of RAM is recommended

- NVIDIA/AMD GPUs supported
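A rough way to check whether a model fits in memory: the weights need about (number of parameters × bits per parameter ÷ 8) bytes. The sketch below applies this to a hypothetical 7-billion-parameter model at different precisions; it counts the weights only, so actual usage is higher once the context and runtime overhead are included.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```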

What are LLMs good at?

- Fixing grammar, bad writing, etc.

- Rephrasing

- Analyzing texts

- Writing computer code

- Answering questions about a knowledge base

- Translating between languages

- Creating structured output

- Producing factual output (only with RAG or web search)